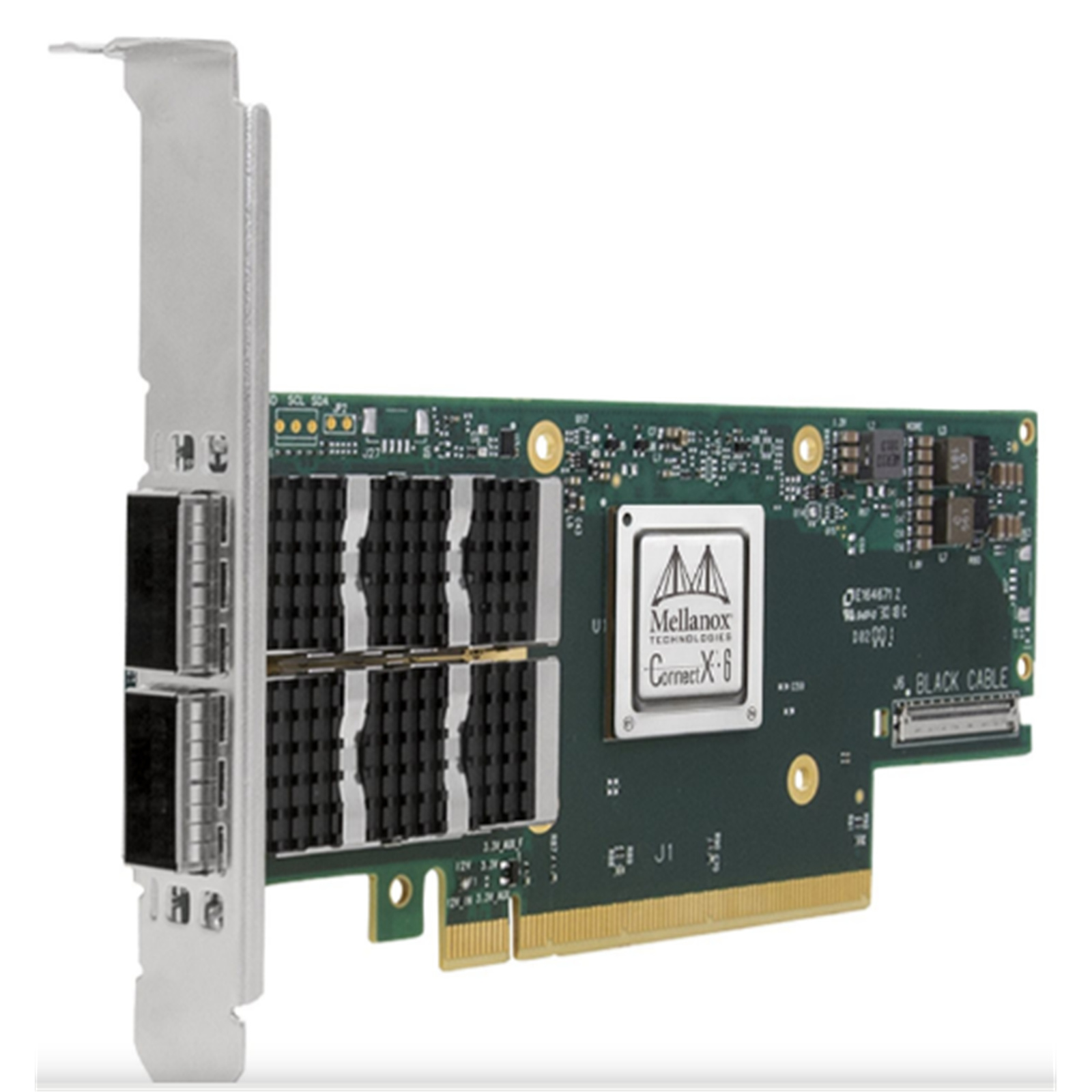

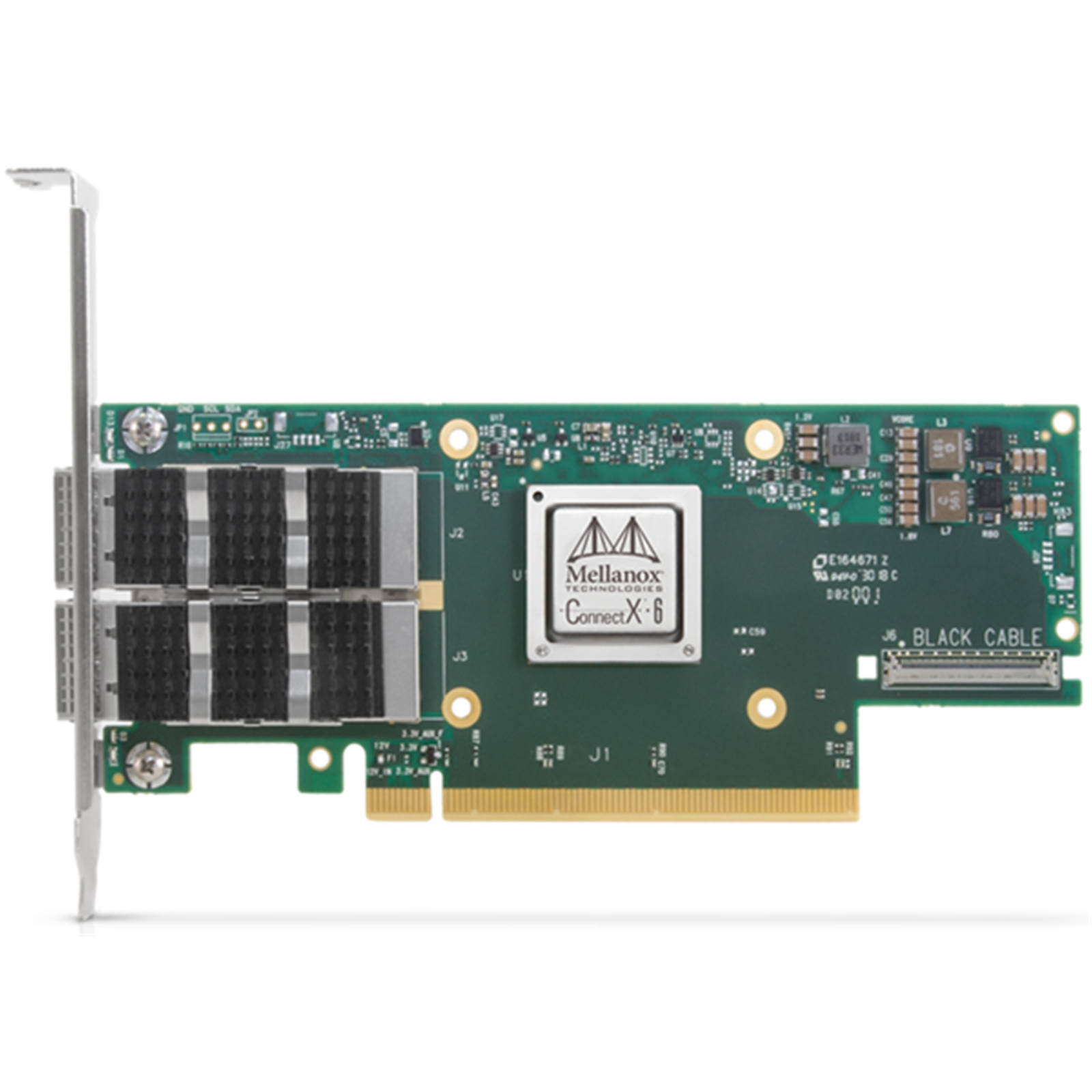

NVIDIA ConnectX-6 VPI Adapter Card, 100Gb/s (HDR100, EDR IB and 100GbE), dual-port QSFP56, PCIe 3.0/4.0 x16 Tall Bracket

Out of stock

- Brand: NVIDIA

- MPN: MCX653106A-ECAT

- Part #: NICNV61201

Product URL: https://www.pbtech.co.nz/product/NICNV61201/NVIDIA-ConnectX-6-VPI-Adapter-Card-100Gbs-HDR100-E

| Branch | New Stock | On Display |
|---|---|---|
| Auckland - Albany | 0 | |
| Auckland - Glenfield | 0 | |
| Auckland - Queen Street | 0 | |
| Auckland - Auckland Uni | 0 | |
| Auckland - Newmarket | 0 | |
| Auckland - Westgate | 0 | |
| Auckland - Penrose | 0 | |
| Auckland - Henderson (Express) | 0 | |
| Auckland - St Lukes | 0 | |
| Auckland - Manukau | 0 | |
| Hamilton | 0 | |
| Tauranga | 0 | |
| New Plymouth | 0 | |
| Palmerston North | 0 | |
| Petone | 0 | |
| Wellington | 0 | |
| Auckland - Head Office | 0 | |
| Auckland - East Tamaki Warehouse | 0 | |
| Christchurch - Hornby | 0 | |
| Christchurch - Christchurch Central | 0 | |
| Dunedin | 0 | |

Features

The HDR InfiniBand and Ethernet adapters for HPE ProLiant Gen10 Plus servers are based on standard Mellanox ConnectX-6 technology.

The HDR InfiniBand and Ethernet adapters are now available in the OCP 3.0 form factor with support for HPE iLO shared network port in Ethernet mode.

- Up to 200Gb/s connectivity per port

- Max bandwidth of 200Gb/s

- Up to 215 million messages/sec

- Sub-0.6usec latency

- Block-level XTS-AES mode hardware encryption

- FIPS capable

- Advanced storage capabilities including block-level encryption and checksum offloads

- Supports both 50G SerDes (PAM4) and 25G SerDes (NRZ)-based ports

- Best-in-class packet pacing with sub-nanosecond accuracy

- PCIe Gen3 and PCIe Gen4 support

- RoHS-compliant

- ODCC-compatible

Low-Latency, High-Bandwidth InfiniBand Connectivity

HPE HDR InfiniBand adapters deliver up to 200Gbps bandwidth and sub-microsecond latency for demanding HPC workloads.

The HPE InfiniBand HDR100 adapters, combined with HDR switches and HDR cables, simplify infrastructure by reducing the number of switches required for a given 100Gbps InfiniBand fabric.

HPE HDR InfiniBand adapters are supported on the HPE Apollo XL and HPE ProLiant DL Gen10 and Gen10 Plus servers.

Offloading Mechanisms

HPE HDR InfiniBand adapters include multiple offload engines that speed up communication by keeping CPU overhead low, which increases throughput.

HPE HDR InfiniBand adapters support MPI tag matching and rendezvous offloads, along with adaptive routing on reliable transport.

Benefits

Industry-leading throughput, low CPU utilization and high message rate

Highest performance and most intelligent fabric for compute and storage infrastructures

Cutting-edge performance in virtualized networks including Network Function Virtualization (NFV)

Mellanox Host Chaining technology for economical rack design

Smart interconnect for x86, Power, Arm, GPU and FPGA-based compute and storage platforms

Flexible programmable pipeline for new network flows

Efficient service chaining enablement

Increased I/O consolidation efficiencies, reducing data center costs & complexity

Specifications

- Supports: up to HDR, HDR100, EDR, FDR, QDR, DDR and SDR InfiniBand speeds as well as up to 200, 100, 50, 40, 25, and 10Gb/s Ethernet speeds

- Networking: 100Gb Ethernet / 100Gb InfiniBand QSFP56 x 2

- Expansion / Connectivity: 2 x 100Gb Ethernet / 100Gb InfiniBand - QSFP56

- Miscellaneous: Tall bracket

- Software / System Requirements: FreeBSD, SUSE Linux Enterprise Server, Microsoft Windows, Red Hat Enterprise Linux, Ubuntu, VMware ESX